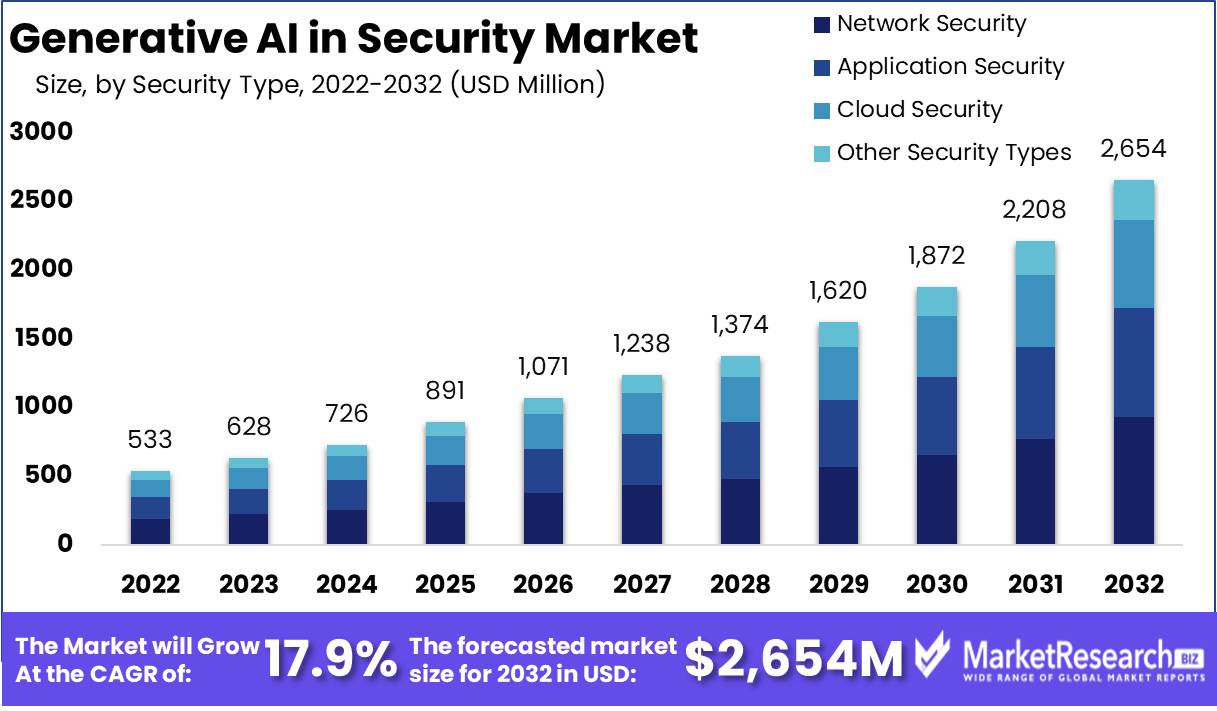

Generative AI in Security Market to Witness Positive Growth at 17.9% CAGR & USD 2654 Mn Valuation by 2032


Market Overview

Published via 11Press: The Generative AI in Security market is expected to be worth around USD 2654 Mn by 2032, up from USD 533 Mn in 2022, growing at a CAGR of 17.9% during the forecast period from 2022 to 2032.
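The headline figures rest on the standard compound annual growth rate relationship, CAGR = (end value / start value)^(1 / years) - 1. The minimal sketch below uses only the 2022 and 2032 values quoted above and assumes a ten-year compounding span. Taken at face value, those endpoints imply roughly 17.4% a year, while compounding USD 533 Mn at 17.9% for ten years gives about USD 2,766 Mn; the gap may reflect rounding or a different base period, which is an assumption rather than something the report states.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report (USD million); a 10-year span (2022 -> 2032) is assumed.
size_2022 = 533.0
size_2032 = 2654.0
years = 2032 - 2022

implied = cagr(size_2022, size_2032, years)
projected = project(size_2022, 0.179, years)

print(f"Implied CAGR 2022-2032: {implied:.1%}")
print(f"2032 value at a 17.9% CAGR: USD {projected:,.0f} Mn")
```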

Generative artificial intelligence (AI) has developed rapidly in recent years, and its applications in the security market are becoming increasingly prominent. Generative AI refers to algorithms and models that generate content, such as images, video, or text, that closely resembles real data. Its applications span multiple industries, including security.

Within the security market, generative AI is being deployed to enhance threat detection, cybersecurity, and surveillance systems. A primary use case is creating realistic synthetic data sets: artificially produced data that mimics real-world scenarios. By generating synthetic threat scenarios, security companies can train and test their systems safely in controlled environments, which helps improve the accuracy and robustness of security solutions.
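The synthetic-data idea can be illustrated with a deliberately simple generative model: fit a Gaussian to each feature of a handful of recorded attack events, then sample new, artificial events from it. This is a minimal sketch under stated assumptions. The feature names (failed logins per minute, distinct usernames tried, kilobytes sent) and their values are hypothetical, the features are assumed independent, and production systems would typically use richer models such as GANs or variational autoencoders. Only the fit-then-sample pattern carries over.

```python
from __future__ import annotations

import random
import statistics
from dataclasses import dataclass


@dataclass
class GaussianFeatureModel:
    """Independent Gaussian per feature: a deliberately simple generative model."""
    means: list[float]
    stdevs: list[float]

    @classmethod
    def fit(cls, rows: list[list[float]]) -> GaussianFeatureModel:
        columns = list(zip(*rows))  # transpose rows into per-feature columns
        return cls(
            means=[statistics.fmean(col) for col in columns],
            stdevs=[statistics.stdev(col) for col in columns],
        )

    def sample(self, n: int, rng: random.Random) -> list[list[float]]:
        # Each feature is drawn independently; values are clamped at zero
        # because counts and byte volumes cannot be negative.
        return [
            [max(0.0, rng.gauss(mu, sigma)) for mu, sigma in zip(self.means, self.stdevs)]
            for _ in range(n)
        ]


# Hypothetical recorded brute-force attempts:
# [failed logins per minute, distinct usernames tried, KB sent]
observed_attacks = [
    [42, 7, 3.1],
    [55, 9, 2.8],
    [38, 6, 3.5],
    [61, 11, 2.9],
    [47, 8, 3.3],
]

model = GaussianFeatureModel.fit(observed_attacks)
synthetic_attacks = model.sample(1000, random.Random(0))  # fixed seed for reproducibility
print(f"{len(synthetic_attacks)} synthetic records, e.g. {synthetic_attacks[0]}")
```

The synthetic records can then be mixed into a detector's training or test set in place of scarce real attack data, which is the controlled-environment benefit the paragraph describes.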

Generative AI also plays an important role in image and video analysis for security purposes. It can generate high-resolution images from low-quality or incomplete inputs, aiding the identification and tracking of individuals or objects. Law enforcement agencies can also use generative models to produce facial composites from witness descriptions to assist with suspect identification.

Another application of generative AI in security is anomaly detection: identifying patterns or behaviors that diverge significantly from the norm and may signal a security threat. Generative models can be trained on large amounts of normal data to learn its underlying patterns, then used to detect anomalies in real time, enabling faster responses to suspicious activities or events.
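The train-on-normal-data approach can be sketched with the simplest possible density model: an independent Gaussian per feature, learned from normal traffic only. An event is flagged when its log-likelihood under that model falls below the least-likely training event by more than a margin. The features, values, and the 5.0 margin are hypothetical choices for illustration; a real system would substitute a deep generative model but keep the same "low likelihood means suspicious" logic.

```python
from __future__ import annotations

import math
import statistics


class GaussianAnomalyDetector:
    """Learns what *normal* events look like and flags events the learned
    model considers unlikely. Each feature is modelled as an independent
    Gaussian, a deliberately simple stand-in for a deep generative model."""

    def __init__(self, margin: float = 5.0) -> None:
        self.margin = margin  # slack in log-likelihood units below the least-likely training event
        self.means: list[float] = []
        self.stdevs: list[float] = []
        self.threshold = -math.inf

    def fit(self, normal_rows: list[list[float]]) -> GaussianAnomalyDetector:
        columns = list(zip(*normal_rows))
        self.means = [statistics.fmean(col) for col in columns]
        # Floor the spread so a constant feature does not cause division by zero.
        self.stdevs = [max(statistics.stdev(col), 1e-6) for col in columns]
        # Anything less likely than the least-likely normal event (minus the margin) is flagged.
        self.threshold = min(self.log_likelihood(r) for r in normal_rows) - self.margin
        return self

    def log_likelihood(self, row: list[float]) -> float:
        total = 0.0
        for x, mu, sigma in zip(row, self.means, self.stdevs):
            z = (x - mu) / sigma
            total += -0.5 * z * z - math.log(sigma * math.sqrt(2 * math.pi))
        return total

    def is_anomaly(self, row: list[float]) -> bool:
        return self.log_likelihood(row) < self.threshold


# Hypothetical per-minute traffic features: [requests, average payload in KB].
normal_traffic = [[100, 2.0], [110, 2.2], [95, 1.9], [105, 2.1], [98, 2.0], [102, 2.05]]
detector = GaussianAnomalyDetector().fit(normal_traffic)

for event in ([101, 2.0], [900, 15.0]):
    print(event, "anomaly" if detector.is_anomaly(event) else "normal")
```

Because only normal data is needed for training, this style of detector can flag attack patterns it has never seen, at the cost of also flagging unusual but benign activity.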

Alongside these potential advantages, generative AI in the security market also raises privacy and ethical concerns. Generative AI can be used to create deepfake content that may be misused for malicious purposes such as impersonation or spreading misinformation. Security companies and policymakers must therefore devise clear frameworks and guidelines that address these challenges and ensure responsible use of the technology.

Request Sample Copy of Generative AI in Security Market Report at: https://marketresearch.biz/report/generative-ai-in-security-market/request-sample

Key Takeaways

- Generative AI is revolutionizing the security market by creating synthetic data sets for training and testing security systems.

- It enhances image and video analysis by generating high-quality content from low-quality inputs, aiding in identification and tracking.

- Generative models assist in creating facial composites based on descriptions, supporting law enforcement in suspect identification.

- Anomaly detection benefits from generative AI, which identifies patterns that deviate from the norm in real time, enhancing security response.

- Privacy and ethical concerns arise from the potential misuse of generative AI to create deepfake content.

- Guidelines for responsible and ethical use must be developed to address these challenges and ensure the proper use of generative AI in security.

- The advancement of generative AI brings improved accuracy, robustness, and effectiveness to security solutions.

- Striking a balance between harnessing the potential of generative AI and addressing privacy and ethical concerns is crucial for a secure future.

Regional Snapshot

- North America, and the United States in particular, is an emerging market for generative AI applications in security. The region is home to numerous tech companies and research institutions driving innovation in this space, and adoption of generative AI for threat detection, cybersecurity, and surveillance is becoming increasingly widespread. In addition, the U.S. government invests heavily in research and development initiatives that support further advances in security-related industries.

- Europe has shown interest in generative AI applications for security. Countries such as the UK, Germany, and France have established security industries with strong cybersecurity initiatives. European Union regulations such as the General Data Protection Regulation (GDPR) can influence the adoption of generative AI by emphasizing privacy and data protection.

- The Asia Pacific region shows significant potential for applying generative AI to security. Countries such as China, Japan, and South Korea have made notable progress in AI-powered security innovation. China is investing heavily in research and development, and its security industry uses AI in various forms, including facial recognition and surveillance systems.

- Middle Eastern and African nations have increasingly turned to AI-powered security solutions, such as surveillance and critical infrastructure protection technologies, in response to growing terrorist and cyber threats. Key adopters include the United Arab Emirates, Saudi Arabia, and South Africa, which are investing heavily in protection against such threats.

- Latin America has seen an upsurge in the adoption of AI-powered security solutions in recent years, particularly in Brazil, Mexico, and Argentina. Countries across the region are adopting these systems for public safety, fraud detection, and border control.

For any inquiries, speak to our expert at: https://marketresearch.biz/report/generative-ai-in-security-market/#inquiry

Drivers

Technological Advancements

Rapid advances in generative AI technologies, including improved deep learning algorithms, increased computational power, and the availability of large-scale datasets, are driving adoption in the security market. These advances enable more accurate and realistic generation of synthetic data sets, as well as better image and video analysis and anomaly detection, resulting in more secure solutions.

Rising Security Threats

As security threats such as cyberattacks, terrorism, and criminal activity become more sophisticated, they require more advanced security solutions. Generative AI has the potential to enhance threat detection, surveillance, and anomaly detection capabilities and help organizations keep pace with emerging threats.

Demand for Increased Surveillance Capability

Demand for improved surveillance has driven the rise of generative AI in the security market. Generative models can produce high-resolution images and videos from low-quality inputs, enabling better identification and tracking of individuals or objects. This capability is especially valuable to law enforcement agencies and organizations that require robust surveillance systems to ensure public safety and security.

Industry-Specific Applications

Generative AI has uses across many industries, such as healthcare, finance, transportation, and defense. Each industry faces distinct security issues for which generative AI can provide tailored solutions. In healthcare, for instance, it can generate synthetic medical data for training and testing healthcare systems while protecting patient privacy and data security.

Restraints

Privacy Concerns

Generative AI technology poses serious privacy risks when applied to security. Realistic synthetic data could be used to create deepfake content for impersonation, fraud, or violations of individuals' privacy if the technology is used unethically or irresponsibly. Ethical use of generative AI is therefore key to addressing these privacy issues and maintaining public trust.

Ethical Implications

Generative AI technology raises significant ethical concerns. Deepfakes created with this technology may be used to spread misinformation, influence public opinion, or deceive individuals. Striking a balance between innovation and responsible use is vital to avoid such consequences and mitigate the harm caused by misuse.

Data Access and Quality Issues

Generative AI models require large and varied datasets for accurate training, which can be hard to obtain in the security realm because sensitive information is scarce or restricted. High-quality, representative data is critical for training generative AI models accurately; a shortage of such data could reduce the effectiveness of security solutions built on this technology.

Computational Resources and Complexity

Generative AI models, particularly those based on deep learning, can be computationally intensive and require substantial resources to train and deploy. This can be prohibitive for organizations with limited computing infrastructure or tight budgets. Their complexity also demands specialized expertise for development and implementation, which may hinder adoption among smaller organizations.

Opportunities

Market Growth

The market for generative AI security solutions is poised for significant expansion in the coming years as more organizations adopt AI-powered security. As organizations recognize the value of these technologies, demand for them has grown sharply, creating opportunities for companies in this market to develop innovative generative AI solutions that meet evolving security requirements.

Collaborative Research and Development Projects

Collaboration among academia, industry, and government entities presents unique opportunities for research and development of generative AI for security. Joint efforts can produce novel algorithms, methodologies, and frameworks that improve security systems, while partnerships foster knowledge transfer and the application of generative AI to real-world security scenarios.

Improved Threat Detection and Response Capability

Generative AI offers an opportunity to substantially strengthen threat detection and response capabilities. Using synthetic data and anomaly detection techniques, security systems can detect and respond to threats more accurately and quickly in real time, leading to faster incident response, fewer false positives, and an improved overall security posture.
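Whether a detector produces fewer false positives depends heavily on where its alert threshold sits. One common, simple approach, assumed here rather than described in the report, is to calibrate the threshold on anomaly scores from events known to be benign, so that at most a chosen fraction of them would raise an alert. The function names and the 5% target below are hypothetical.

```python
from __future__ import annotations

import math
from collections.abc import Sequence


def threshold_for_fpr(benign_scores: Sequence[float], target_fpr: float) -> float:
    """Pick an alert threshold so that at most `target_fpr` of known-benign
    events score strictly above it (higher score = more suspicious)."""
    if not benign_scores:
        raise ValueError("need at least one benign score")
    if not 0.0 <= target_fpr < 1.0:
        raise ValueError("target_fpr must be in [0, 1)")
    ordered = sorted(benign_scores)
    allowed = math.floor(target_fpr * len(ordered))  # benign alerts we are willing to tolerate
    return ordered[len(ordered) - 1 - allowed]


def false_positive_rate(benign_scores: Sequence[float], threshold: float) -> float:
    """Fraction of benign events that would still trigger an alert."""
    return sum(s > threshold for s in benign_scores) / len(benign_scores)


# Hypothetical anomaly scores for 100 benign events, spread evenly over [0, 1).
benign = [i / 100 for i in range(100)]
threshold = threshold_for_fpr(benign, target_fpr=0.05)
print(f"threshold={threshold:.2f}, benign alert rate={false_positive_rate(benign, threshold):.0%}")
```

Tightening `target_fpr` reduces alert fatigue but lets more borderline attacks through, so the value is a policy decision rather than a purely technical one.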

Ethical and Privacy Considerations for Businesses

Organizations have the chance to stand out in the market through ethical AI deployment. By prioritizing privacy, creating accountable practices, and adhering to relevant regulations, they can build trust with customers and stakeholders and establish themselves as industry leaders in ethical AI.


Challenges

Adversarial Attacks

Generative AI models may be vulnerable to adversarial attacks, in which malicious actors deliberately alter inputs to deceive the system or cause it to produce incorrect outputs. In security applications, such attacks could compromise reliability and effectiveness by causing false positives or false negatives in threat detection or surveillance.
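How a small, deliberate input change can cause a false negative is easiest to see against a linear detector. The minimal sketch below assumes a hypothetical logistic-regression detector (not a generative model, chosen because its gradient can be written by hand) and applies a fast-gradient-sign-style evasion step: for a linear score the gradient with respect to the input is simply the weight vector, so shifting each feature by -epsilon times the sign of its weight lowers the malicious score as much as possible within a per-feature budget of epsilon. The weights, bias, event, and epsilon are all illustrative values.

```python
from __future__ import annotations

import math


def malicious_probability(weights: list[float], bias: float, features: list[float]) -> float:
    """Hypothetical logistic-regression detector: P(malicious | features)."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-score))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def evasion_perturbation(weights: list[float], features: list[float], epsilon: float) -> list[float]:
    """Fast-gradient-sign-style evasion against a linear detector.

    The gradient of a linear score with respect to the input is `weights`,
    so moving each feature by -epsilon * sign(weight) lowers the score by
    epsilon * sum(|w|), the largest drop possible when no feature may move
    by more than epsilon.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


weights = [1.5, -0.5, 2.0]
bias = -3.0
event = [1.2, 0.4, 1.0]  # hypothetical malicious event, initially detected

adversarial = evasion_perturbation(weights, event, epsilon=0.3)
print(f"original:    P(malicious) = {malicious_probability(weights, bias, event):.2f}")
print(f"adversarial: P(malicious) = {malicious_probability(weights, bias, adversarial):.2f}")
```

Here each feature moves by only 0.3, yet the detector's output drops from about 0.65 to about 0.35, flipping a detection into a miss at a 0.5 cut-off.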

Lack of Interpretability

Generative AI models, particularly deep neural networks, often lack interpretability, making it hard to understand why a given output was produced. This poses challenges in security applications, where transparency and explainability are crucial for decision-making and accountability.

Legal and Regulatory Frameworks

The rapid development of generative AI for security has outpaced the legal and regulatory frameworks that govern its deployment, making it harder to ensure the technology is used responsibly and ethically. Governments and regulatory bodies must address this gap by creating frameworks that balance innovation, security, and privacy.

Bias and Fairness

Generative AI models may inherit bias from their training data, leading to biased outputs or discriminatory behavior that is harmful in a security context. Unfair profiling or targeting can have severe consequences for certain individuals or groups. Ensuring fairness in these models requires ongoing effort and continuous algorithmic improvement.
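A first step in checking for unfair profiling is simply measuring how often a system flags each group. The minimal sketch below computes per-group flag rates and the largest gap between them (a demographic-parity style check). The group labels and counts are hypothetical, and demographic parity is only one of several fairness metrics; which metric is appropriate depends on the deployment context and applicable law.

```python
from __future__ import annotations

from collections import defaultdict
from collections.abc import Iterable


def flag_rates_by_group(records: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """records: (group, was_flagged) pairs -> fraction of events flagged per group."""
    flagged: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {g: flagged[g] / totals[g] for g in totals}


def parity_gap(rates: dict[str, float]) -> float:
    """Largest difference in flag rate between any two groups (0.0 means parity)."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: 100 events per group, 10 flagged in group A, 25 in group B.
audit_log = [("A", i < 10) for i in range(100)] + [("B", i < 25) for i in range(100)]
rates = flag_rates_by_group(audit_log)
print(rates, f"gap={parity_gap(rates):.2f}")
```

A persistent gap does not prove discrimination on its own, but it tells an auditor where to look, and tracking it across model versions is one practical form of the continuous effort described above.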

Market Segmentation

Based on Security Type

- Network Security

- Application Security

- Cloud Security

- Other

Based on Service

- Professional Services

- Managed Services

Based on the Deployment Mode

- Cloud-Based

- On-premises

Based on End Users

- Retail

- BFSI

- Manufacturing

- Healthcare

- Other

Key Players

- IBM Corp.

- Intel Corp.

- NVIDIA Corp.

- Securonix Inc.

- Skycure Inc.

- Threatmetrix Inc.

- Other Key Players

Report Scope

| Report Attribute | Details |
|---|---|
| Market Size Value in 2022 | USD 533 Mn |
| Revenue Forecast by 2032 | USD 2654 Mn |
| Growth Rate | CAGR of 17.9% |
| Regions Covered | North America, Europe, Asia Pacific, Latin America, Middle East & Africa, and Rest of the World |
| Historical Years | 2017-2022 |
| Base Year | 2022 |
| Estimated Year | 2023 |
| Short-Term Projection Year | 2028 |
| Long-Term Projection Year | 2032 |

Request Customization Of The Report: https://marketresearch.biz/report/generative-ai-in-security-market/#request-for-customization

Recent Developments

- In 2022, Google Cloud introduced its Generative AI for Cybersecurity service, which uses generative AI to create synthetic data for training machine learning models for security applications, helping organizations improve their ability to detect and prevent cyberattacks.

- In 2019, Palo Alto Networks acquired Demisto, a security orchestration, automation, and response (SOAR) company. Demisto's platform uses AI to automate security tasks such as incident response and threat hunting, helping organizations save time while strengthening their security posture.

- In 2023, IBM Security introduced its Cloud Pak for Security platform featuring a generative AI component designed to help organizations strengthen their cyberattack detection and prevention capabilities. The technology uses synthetic data to train machine learning models, helping organizations detect cyberattacks more quickly.

FAQ

1. What is generative AI, and how can it be implemented in the security market?

A. Generative AI refers to the use of algorithms and models to generate content that closely resembles real data. In the security market, it is used to improve threat detection, cybersecurity, and surveillance systems, as well as to generate synthetic data, improve image and video analysis, and detect anomalies in real time.

2. How does Generative AI support threat detection in security?

A. Generative AI enhances threat detection by producing realistic synthetic data sets that simulate various threat scenarios, giving security systems controlled environments for training and testing that increase accuracy and robustness. Generative AI can also analyze patterns and identify anomalies in real time, allowing security systems to detect and respond to potential threats more quickly.

3. What are the ethical considerations associated with generative AI in security systems?

A. One of the primary ethical concerns is the technology's potential misuse to create deepfake content for impersonation, spreading misinformation, or breaching individuals' privacy. Responsible and ethical use of generative AI is therefore key to mitigating these concerns and maintaining public trust.

4. Can generative AI improve surveillance systems?

A. Yes, generative AI can significantly enhance surveillance systems. Its ability to generate high-resolution images and videos from low-quality or incomplete inputs aids the identification and tracking of individuals or objects, making the technology especially beneficial for law enforcement agencies and organizations that rely on robust surveillance systems to protect public safety.

5. What are the challenges associated with implementing generative AI in the security market?

A. Key challenges include privacy concerns, ethical considerations, the availability of quality training data, computational resource requirements, adversarial attacks, lack of interpretability, and immature legal and regulatory frameworks. Overcoming these hurdles requires addressing privacy and ethical considerations, ensuring data quality, improving interpretability, and developing appropriate regulations, guidelines, and legal frameworks.

6. Are there specific industries that could benefit from using generative AI for security purposes?

A. Yes, various industries can benefit from using generative AI for security purposes, including healthcare, finance, transportation, and defense. Each industry faces specific security challenges for which generative AI can provide solutions. In healthcare, for instance, it could generate synthetic medical data for testing healthcare systems while protecting patient privacy and data security.

7. Why is collaboration between academia, industry, and government essential for generative AI in security?

A. Collaboration among academia, industry, and government entities plays an essential role in driving innovation and addressing security challenges effectively. Joint efforts may lead to advanced algorithms, methodologies, and frameworks that improve the effectiveness and efficiency of security systems, while partnerships enable knowledge transfer, research advances, and the application of generative AI to real-world security scenarios.

Contact us

Contact Person: Mr. Lawrence John

Marketresearch.Biz

Tel: +1 (347) 796-4335

Send Email: [email protected]

Content has been published via 11Press. For more details, please contact [email protected]

We are the team behind market.us, marketresearch.biz, market.biz, and more. Our purpose is to keep our customers ahead of the game with regard to the markets. Markets may fluctuate up or down, but we will help you stay ahead of the curve. Our consistent growth and ability to deliver in-depth analyses and market insight have earned the trust of genuine market players, who rely on us for the data and information they need to make balanced and decisive marketing decisions.